IBM’s revelation at SC09 created quite a stir and immediately brought forth visions of Cylons and HAL 9000.

The cognitive computing team at IBM Research has moved significantly forward in creating a large-scale cortical simulation and a new algorithm that synthesizes neurological data — two major milestones on the path to a cognitive computing chip. IBM says computers that mimic the human brain are just 10 years away.

This is not a mere advancement on the artificial intelligence (AI) scale. This is a different approach to computing.

“Non-cognitive computing approaches to artificial intelligence have generally led to underwhelming results: We have nothing near HAL-9000 level capabilities even after many years of trying, so there has been increasing attention on cognitive computing approaches,” Steven Flinn, chief executive officer of ManyWorlds and author of the upcoming book The Learning Layer: Building the Next Level of Intellect in Your Organization, told TechNewsWorld.

AI vs. Cognitive Computing

Cognitive computing isn’t your father’s artificial intelligence, which is to say it isn’t just a new model of an old idea.

“Cognitive computing goes well beyond artificial intelligence and human-computer interaction as we know it — it explores the concepts of perception, memory, attention, language, intelligence and consciousness,” Dharmendra Modha, manager of cognitive computing at IBM Research – Almaden, told TechNewsWorld.

“Typically, in AI, one creates an algorithm to solve a particular problem,” Modha said. “Cognitive computing seeks a universal algorithm for the brain. This algorithm would be able to solve a vast array of problems.”

Still confused? Then try looking at it as the difference between learning and thinking.

“AI attempts to have computers learn how to learn — for computers to make their own connections without only being constrained by their hardware and software,” said Daniel Kantor, M.D., BSE, medical director of Neurologique and president-elect of the Florida Society of Neurology.

“A cognitive computer could quickly and accurately put together the disparate pieces of any complex data puzzle and help people make good decisions rapidly,” he told TechNewsWorld.

“The problem with this is that even the human brain is unable to do that,” Kantor laughed. “These two technologies could be used in tandem to complement each other if the algorithms are sophisticated enough.”

Aiming for Human, Landing on Cat Feet

Cognitive computing is a different beast entirely — and right now it’s looking very cat-like.

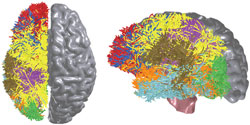

Scientists at IBM Research, in collaboration with colleagues from Berkeley Lab, have actually performed the first near real-time cortical simulation of the brain that exceeds the scale of a cat cortex and contains 1 billion spiking neurons and 10 trillion individual learning synapses.

Cat? How did the scientists end up exceeding the scale of a cat cortex but falling short of human scale?

“This was the best that we could achieve given the available supercomputing resources,” explained Modha. “Specifically, using DAWN Blue Gene / P supercomputer at Lawrence Livermore National Lab with 147,456 processors and 144 TB of main memory, the simulation used 1.6 billion neurons and 9 trillion synapses.”

For some scale reference points, consider this:

- A cat cortex has roughly 760 million neurons and 6 trillion synapses. So, IBM’s simulation exceeds cat-scale.

- A monkey cortex has roughly 2 billion neurons and 20 trillion synapses. IBM’s simulation is roughly equal to 50 percent of monkey-scale.

- A human cortex has 22 billion neurons and 220 trillion synapses. IBM’s simulation, then, is 4.5 percent of human-scale.
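The percentages in the list above can be reproduced with a few lines of arithmetic. The sketch below is only a back-of-envelope check, not IBM's own calculation. It assumes the rounded simulation size of 1 billion neurons and 10 trillion synapses, and the names `SIM`, `CORTEX` and `scale_percent` are made up for illustration.

```python
# Back-of-envelope check of the scale comparisons quoted in the article.
# Cortex sizes come from the article; the simulation size is ASSUMED to be
# the rounded figure (1 billion neurons, 10 trillion synapses).
SIM = {"neurons": 1e9, "synapses": 10e12}

CORTEX = {
    "cat": {"neurons": 760e6, "synapses": 6e12},
    "monkey": {"neurons": 2e9, "synapses": 20e12},
    "human": {"neurons": 22e9, "synapses": 220e12},
}


def scale_percent(species):
    """Return the simulation size as a percentage of a cortex, per measure."""
    cortex = CORTEX[species]
    return {key: 100.0 * SIM[key] / cortex[key] for key in SIM}


if __name__ == "__main__":
    for name in CORTEX:
        pct = scale_percent(name)
        print(
            f"{name}: {pct['neurons']:.1f}% of neurons, "
            f"{pct['synapses']:.1f}% of synapses"
        )
```

With these inputs the cat figures come out above 100 percent, the monkey figures at about 50 percent and the human figures at about 4.5 percent, which matches the list. Using the 1.6 billion neurons and 9 trillion synapses that Modha cites would shift the ratios somewhat.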

“If we have access to a supercomputer with 1 exaflops of computation and 4 petabytes of main memory, a near real-time human cortex-scale simulation would be possible,” said Modha.
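The 4-petabyte memory figure can be sanity-checked against the numbers Modha gives for the Dawn run. The sketch below is a rough extrapolation, not IBM's stated method. It assumes that the full 144 TB of main memory was devoted to the 9 trillion simulated synapses, that memory grows linearly with synapse count, and that the units are decimal. The constant and function names are hypothetical.

```python
# Rough extrapolation from the Dawn run to a human-scale simulation.
# ASSUMPTIONS (not from IBM): all main memory is attributed to synapses,
# memory scales linearly with synapse count, and TB/PB are decimal units.
DAWN_MEMORY_BYTES = 144e12  # 144 TB of main memory
DAWN_SYNAPSES = 9e12        # 9 trillion simulated synapses
HUMAN_SYNAPSES = 220e12     # 220 trillion synapses in a human cortex


def bytes_per_synapse():
    """Upper-bound memory cost per synapse implied by the Dawn figures."""
    return DAWN_MEMORY_BYTES / DAWN_SYNAPSES


def human_scale_memory_petabytes():
    """Memory needed for a human-scale run under the linear assumption."""
    return bytes_per_synapse() * HUMAN_SYNAPSES / 1e15


if __name__ == "__main__":
    print(f"{bytes_per_synapse():.0f} bytes per synapse")
    print(f"{human_scale_memory_petabytes():.2f} PB for a human-scale cortex")
```

Under those assumptions the estimate works out to 16 bytes per synapse and about 3.5 petabytes in total, the same order as the 4 petabytes Modha quotes.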

Elements of Cognitive Computing

Essentially, the scientists created a new algorithm called “BlueMatter” that exploits the Blue Gene supercomputing architecture in order to measure and map the human brain. It is noninvasive, meaning subjects’ skulls are not drilled into, and there are no talking human heads in bell jars à la mad-scientist horror movies.

Instead, the scientists use magnetic resonance diffusion weighted imaging to measure and map the connections between all cortical and subcortical locations within the living brain.

The resulting wiring diagram untangles the brain’s communication network and helps scientists understand how the human brain represents and processes information in a tiny space and with little energy burn.

The implications are huge — so much so that all possible uses have yet to be imagined.

“In the future, businesses and individuals will simultaneously need to monitor, prioritize, adapt and make rapid decisions based on ever-growing streams of critical data and information,” explained IBM’s Modha.

“A cognitive computer could quickly and accurately put together the disparate pieces of this complex puzzle and come to a logical response, while taking into account context and previous experience,” he said.

“It could have implications for mining live, streaming data from sensor networks, macro- and micro-economic data analysis and trading in a financial setting, understanding live audio and video feeds, and even the gaming industry,” suggested Modha.

“It would have the ability to point out anomalies, deal with constantly changing parameters, and possibly prioritize what to look at first in the data,” he concluded.

Human Brain Add-On

The simulator is also an important tool for scientists to test their hypotheses for how the brain works.

“One of our highest goals in neuroengineering is to develop circuitry that can mimic the human brain, not only for a certain discrete action, but to mimic the thought process in general,” explained Neurologique’s Kantor.

“Such technology would allow us to use microchips [or] nanochips to augment brain function in someone who has suffered a brain injury,” he said.

“It is entirely feasible that we will see such technology in the next decade, but it may not be applicable to many types of brain injuries,” cautioned Kantor.

“Often damage to the brain — from disease, traumatic brain injury or a direct blow to the head — destroys part of a neural circuit, but leaves other parts in place,” he pointed out. “This means that it would be more useful to have artificial neurons that can grow and make synaptic connections with other healthy neurons to reform a circuit.”

Cognitive computing mimics the human brain by using hardware and software, and by mapping or augmenting wetware.

To sum it up: Cylons and HAL are out, and Johnny Mnemonic is in.