Ninety-one percent of Americans believe they have lost control of their personal information — and many also don’t trust companies that buy, sell, barter, and combine their habits and activities to better “serve” — aka “manipulate” — them, a recent Pew Research survey found.

Along similar lines, they don’t particularly trust governments either, as those governments monitor communications and movement.

This distrust is becoming a big deal.

Edward Snowden shone a spotlight on how the war on terror has been undermining the foundation of a free America through NSA and other government activities — how, even as the NSA protects America, it erodes what makes America great in the first place. This is the fundamental challenge of our time — how to protect freedom, opportunity and our country without destroying America’s strength, ingenuity, passion, and work ethic through the creation of an all-knowing surveillance state.

It turns out that Apple, under Tim Cook’s leadership, is doing a great service for American values, both at home and abroad. Apple is holding up its products as a better way to do business, as well as working to ensure that its iPhones are secure and private: from criminals and hackers, certainly, but maybe even from the well-intentioned law enforcement world as well.

Enforcement vs. Education

The goal of law enforcement is to enforce the law and deter crime — usually through punishment or the threat of such punishment. The problem is that although overzealous surveillance can achieve a desired outcome — reduce or eliminate crime — it comes with an extraordinarily high cost to humanity: a reduction in creativity, productivity and maybe even joy. Worse, I believe too much oversight results in a dangerous drop in human empathy.

Parents know this intuitively. Parents could wire up their entire home with surveillance cameras, for example, and keep a running feed of their kids’ activities. Every time a sister bashed her brother over the head with a doll, there could be instant and just punishment.

The correlation would become clear, but the outcome would be to stifle impulses and decision making. The young girl would learn that if she hits her brother, then she will get punished. She would not learn, for example, that hitting hurts her brother — and that’s not a good thing.

Ultimately, the kid would learn not to hit to avoid punishment, instead of learning not to hit because it’s bad for her relationship with her brother and causes him pain, which only matters if the child develops some sort of human sense of empathy in the first place.

It gets worse. If a kid takes a cookie and gets punished, the option of taking a cookie gets removed. If the thought of taking a cookie is removed, the kid isn’t going to think about taking a cookie or what the consequences might be. The choice to ask, to choose a healthier food, to simply reason through a cause-and-effect situation: all that’s gone.

Even though parents sometimes reach their wits’ end trying to figure out which child took a toy from the other first and who escalated the conflict by biting, they also know that video monitoring their children is a bad thing.

Meanwhile, the world’s population is constantly getting bigger, but Earth is not. Empathy and human understanding will, in the long run, do more for the world than creating a series of impenetrable countries where people’s thoughts and actions are directed down increasingly narrow tunnels. Freedom is connected to opportunity and both are connected to creativity — as well as to the American drive to build, invent and excel.

A sense of privacy is important, because it’s connected to a sense of freedom, which is connected to the American spirit. If you mess with privacy, you start messing with a fundamental element of American spirit. I don’t think most Americans know how to articulate why they’re worried about their privacy — because most are law-abiding citizens.

The argument, “If you have nothing to hide, why do you care if anyone looks and watches?” doesn’t hold up, but it’s not because Americans are planning to become criminals. No, it doesn’t hold up because most of us sort of know that an erosion of privacy also eats away at the Americans we are inside.

Enter Tim Cook and Apple

Apple is not participating in the vast information-gathering machine that takes data points from consumers and combines them with data from other companies and other agencies, and creates incredibly detailed profiles about who you are and how you likely will act when presented with certain options.

And Apple could do this. Apple could collect and aggregate its customer data and immediately create a multibillion-dollar information-based business to sell to advertisers, to insurance companies, to service providers, to casinos, to banks.

But Apple is not doing this, and I respect that.

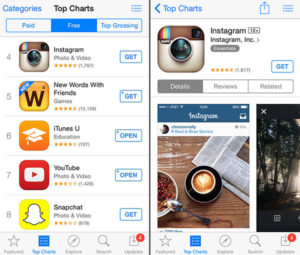

In fact, Apple is bucking trends and pressure in order to deliver the best possible product. Apple could have created Apple Pay in such a way that Apple Pay would reward merchants with data and “loyalty” programs. Instead, Apple is not going to get in the middle of how merchants push customers into loyalty programs or try to influence buying behavior.

Apple Pay doesn’t fix this — for example, even if you pay with cash, at many stores you can’t get the lower price unless you swipe your store loyalty card to help build a store’s customer profile database — but Apple isn’t making it any easier on merchants.

Cook spoke about Apple Pay during a Q&A interview at the WSJD Live global technology conference in October.

“I think it’s the first and only mobile payment system that is both easy, private and secure — so I think we hit on all three key points that the customer cares about,” he said.

“I think merchants have different objectives sometimes, but in the long arc of time you are only relevant as a retailer or a merchant if your customers love you,” Cook added. “We’ve said up front, we’re going to be very different on this. We don’t want to know what you buy, when you buy it. We don’t want to know anything — and so we’re not collecting your data. We’re not Big Brother.”

This is fantastic. Then later, privacy and security came up once again (around the 24-minute mark).

“What our value system is… we believe that your data is yours, and that we’re not about collecting every detail about you and knowing what time you go to bed and where you spend your money and what the temperature is in your house and what things you searched on. None of that. We don’t read your email, we don’t read your iMessages, we don’t do any of this. And so we’ve designed — we did this years ago — we designed iMessage such that we don’t keep any of it. And so if somebody comes to get it — a bad guy or government — we can’t provide it,” Cook explained.

“If somebody tries to get your FaceTime records, we can’t supply it. With iOS 8, we added some things to that to further encrypt it that puts the user in charge. And there’s been some comments from some law enforcement types that said, ‘Hey, this is not good, we don’t have the flexibility we did before.’

“I look at that and say, ‘Look, if law enforcement wants something they should go to the user and get it. It’s not for me to do that.’ Also, by the way, I wouldn’t ever do this, but if you design something where the key is under the mat, the bad guy can get that, too. It’s just not the good guy,” he said.

Cook believes Apple’s customers want more private devices and that they don’t even want Apple harvesting their data — so Apple isn’t doing that.

“What you do should be clear,” he said. “People should understand what you’re doing and what the implications are.”

Cook as Privacy Hero?

What this means to me is that at the very top of Apple, Cook places a premium on customer trust, and he’s willing to talk about it. He’s willing to go on record with The Wall Street Journal and say that Apple is not using your iPhone to harvest data and build a vast treasure trove of information that can be used surreptitiously to manipulate your choices and actions.

Apple’s business is creating a fantastic product that you’ll buy because it’s a great product. As a business tactic, this is critical — Apple can’t expect to sell Apple Watches with health apps and deliver a HomeKit-based home automation hub if consumers think everything is logged for future manipulation.

So Cook is making the right business move, but I also believe that he thinks it’s the right thing to do. And I respect that more than the smart business move.

There’s more, though.

What I find incredibly important is that China eventually will deliver Apple’s largest revenue stream — and China’s system of government and business models care little for privacy, freedom, and what an inventive human can bring to the world.

By creating an encrypted iPhone that Apple and governments can’t easily break open, Cook is at once making a savvy business decision — Apple can’t sell iPhones abroad if countries believe they’re just backdoor keys for the NSA — but also pushing forward an even more important agenda that illustrates how you can run a business by building a product (and not by creating a user base as a product to sell to other sorts of customers).

In fact, after learning last month that China allegedly was using hacking tactics to get Apple ID data from its own Chinese users, Cook flew to China to meet with a top Chinese government official.

If Cook can use his position at Apple, as well as his own personal beliefs, to influence China to better tolerate freedom in a positive way, I like that — even if the most callous watcher simply believes that Cook is just trying to protect Apple so it can continue to sell iPhones in China without users worrying about security.

As for privacy, one attendee at the WSJ event asked Cook what might change things — what might be the tipping point that causes most people to recognize what some companies are doing with our data?

“I think it will take some kind of event — because people aren’t shaken until something major happens, and when that happens, everybody wakes up and says, ‘Oh my God,’ and they make a change. And so what that event is, I don’t know,” Cook said. “But I’m pretty convinced that it’s going to happen — it’s just a matter of what, and when it occurs.”