Google researchers last month described a way to look inside the artificial neural networks behind recent progress in image classification and speech recognition.

Image classification and speech recognition tools are based on well-known mathematical methods, but why certain models work while others don’t remains poorly understood, software engineers Alexander Mordvintsev and Mike Tyka and software engineering intern Christopher Olah noted in a blog post.

To help unravel the mystery, the team trained an artificial neural network by showing it millions of images — “training examples” — and gradually adjusting the parameters until the network was able to provide the desired classifications.
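In code, that kind of training loop looks roughly like the sketch below. It is a toy illustration, not Google's system: a single-neuron logistic classifier stands in for a deep network, and the two-pixel "images," the learning rate and the function names (`train`, `classify`) are hypothetical. Each pass over the examples nudges the parameters a small step in the direction that reduces the classification error.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_prob(w, b, x):
    # Weighted sum of the "pixels" plus a bias, squashed to a probability.
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train(examples, epochs=200, lr=0.5, seed=0):
    """Show labeled examples repeatedly, nudging the parameters after each one."""
    rng = random.Random(seed)
    n = len(examples[0][0])
    w = [rng.uniform(-0.1, 0.1) for _ in range(n)]  # small random starting weights
    b = 0.0
    for _ in range(epochs):
        for x, label in examples:
            # For a sigmoid output with cross-entropy loss, the gradient with
            # respect to the weighted sum is simply (prediction - label).
            err = predict_prob(w, b, x) - label
            # Move every parameter a small step against that gradient.
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


def classify(w, b, x):
    return 1 if predict_prob(w, b, x) >= 0.5 else 0


# Hypothetical two-pixel "images": 1 = bright, 0 = dark.
examples = [([0.9, 0.8], 1), ([0.1, 0.2], 0), ([0.8, 0.95], 1), ([0.15, 0.05], 0)]
w, b = train(examples)
print(classify(w, b, [0.85, 0.9]), classify(w, b, [0.1, 0.1]))
```

Real image classifiers apply the same principle of small, repeated parameter adjustments, only across far more parameters and training images.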

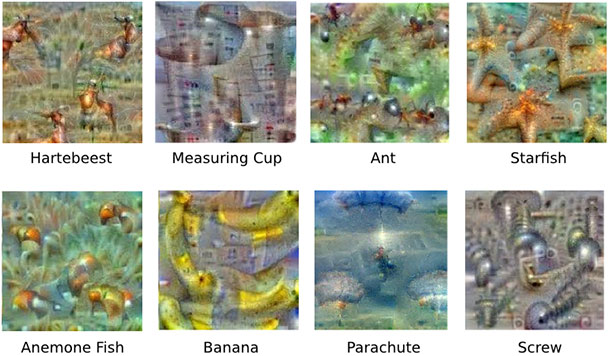

The team dubbed its process “inceptionism.” The networks involved typically consist of 10 to 30 stacked layers of artificial neurons. Each image was fed into the input layer, which passed its results to the next layer, and so on until the output layer was reached. The final layer provided the network’s answer.
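The layer-by-layer flow can be sketched in plain Python. This is an illustrative assumption, not the architecture the researchers used: a hypothetical three-layer network with hand-picked weights, a four-value stand-in for an image, and made-up class labels. Hidden layers pass their outputs forward, and the final layer turns its scores into probabilities whose largest entry is the network’s answer.

```python
import math


def dense(weights, biases, inputs):
    # Each neuron in the layer takes a weighted sum of every input, plus a bias.
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def relu(values):
    # Hidden-layer neurons pass positive signals through and silence negative ones.
    return [max(0.0, v) for v in values]


def softmax(values):
    # Turn the output layer's raw scores into probabilities that sum to 1.
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def forward(layers, image):
    """Feed an image through the stack one layer at a time; keep each layer's output."""
    activations = [image]
    x = image
    for i, (weights, biases) in enumerate(layers):
        x = dense(weights, biases, x)
        x = softmax(x) if i == len(layers) - 1 else relu(x)
        activations.append(x)
    return activations


# A hypothetical three-layer network with hand-picked weights and two made-up classes.
layers = [
    ([[1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]], [0.0, 0.0]),  # input (4) -> hidden (2)
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),                    # hidden (2) -> hidden (2)
    ([[2.0, 0.0], [0.0, 2.0]], [0.0, 0.0]),                      # hidden (2) -> output (2)
]
labels = ["class A", "class B"]
image = [0.9, 0.1, 0.2, 0.3]  # a four-"pixel" stand-in for a photo

acts = forward(layers, image)
probs = acts[-1]
answer = labels[probs.index(max(probs))]
print(answer, [round(p, 3) for p in probs])
```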

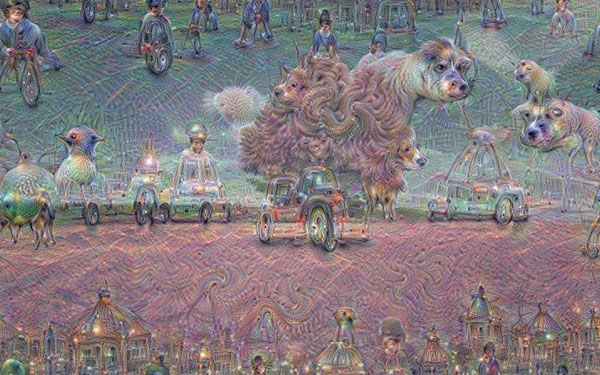

The software was able to build up an idea of what it thought the object should look like. The results, in a word, were surprising. Instead of producing something resembling actual objects, the network added components and refined the images in ways that often resembled modern art.

Creative and Original Thought

The research was important because it offered a way to check what a network had actually learned during training, the Google team noted. In some cases, it revealed that the neural net was not looking for what the researchers expected. For instance, the network’s renderings of dumbbells always included a muscular arm holding them.

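Moreover, each layer dealt with features at a different level of abstraction, so the complexity of the generated features depended on which layer was enhanced. Lower layers tended to produce strokes or simple ornament-like patterns, while higher layers produced more sophisticated features or even whole objects.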
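The idea of enhancing a chosen layer can be sketched as gradient ascent on the image itself: measure how strongly a layer responds, work out how each pixel affects that response, and nudge the pixels to increase it. This is a toy version under stated assumptions, not the team's code. The two-layer network, its weights, the objective (half the sum of squared activations), the fixed step size and the function names are all hypothetical choices made for illustration.

```python
def dense(weights, biases, inputs):
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def relu(values):
    return [max(0.0, v) for v in values]


def run_to_layer(layers, image, k):
    """Forward pass through layers 0..k; also record each layer's input and pre-activation."""
    x = image
    trace = []
    for weights, biases in layers[:k + 1]:
        z = dense(weights, biases, x)
        trace.append((x, z))
        x = relu(z)
    return x, trace


def objective(layers, image, k):
    # How strongly layer k responds to the image: half the sum of squared activations.
    acts, _ = run_to_layer(layers, image, k)
    return 0.5 * sum(a * a for a in acts)


def input_gradient(layers, image, k):
    """Backpropagate the objective from layer k all the way to the input pixels."""
    acts, trace = run_to_layer(layers, image, k)
    grad = list(acts)  # d(0.5 * sum a^2) / da = a
    for (x, z), (weights, _) in zip(reversed(trace), reversed(layers[:k + 1])):
        # Through the ReLU: gradient flows only where the neuron was active.
        grad = [g if zi > 0 else 0.0 for g, zi in zip(grad, z)]
        # Through the weighted sum: each input collects gradient from every neuron it feeds.
        grad = [sum(weights[i][j] * grad[i] for i in range(len(weights))) for j in range(len(x))]
    return grad


def amplify(layers, image, k, steps=20, step_size=0.1):
    """Gradient ascent on the image itself: whatever layer k detects, make more of it."""
    img = list(image)
    for _ in range(steps):
        g = input_gradient(layers, img, k)
        img = [p + step_size * gi for p, gi in zip(img, g)]
    return img


# A hypothetical two-layer network; the weights are hand-picked for illustration.
layers = [
    ([[1.0, -1.0, 0.0], [0.0, 1.0, 1.0]], [0.0, 0.0]),  # lower layer: 3 pixels -> 2 features
    ([[2.0, -1.0]], [0.0]),                               # higher layer: 2 features -> 1
]
image = [0.5, 0.2, 0.1]
low = amplify(layers, image, k=0)
high = amplify(layers, image, k=1)
print([round(p, 2) for p in low], [round(p, 2) for p in high])
```

In this toy setup, enhancing the lower layer raises the third pixel while enhancing the higher layer pushes it down, a small-scale echo of the point that what emerges depends on which layer is amplified.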

The inceptionism techniques could help researchers understand and even visualize how neural networks are able to carry out various classification tasks. A better understanding of how the network learns through the training process could lead to improvements in network architecture.

“This is about creativity, coming up with something new from complete randomness,” said Roger Entner, principal analyst at Recon Analytics.

“This is how we are able to give the computer’s AI a new idea,” he told TechNewsWorld.

“We basically begin with something almost random and impose our order, and it — and through this, it is able to create something new,” Entner explained. “In this way, it is about original thought — creativity and original thought.”

Not So Abstract

One unforeseen benefit of the study could be a new tool that lets artists remix visual concepts, the researchers suggested. The results could be striking, perhaps even disturbing, abstract images, but the potential doesn’t end there.

“This is about how we use databases to teach computers to learn,” said Jim McGregor, founder and principal analyst at Tirias Research.

“Right now, deep learning is still in its research phase; this is about perfecting the algorithms,” he told TechNewsWorld.

Once it’s perfected, the applications could be unlimited, McGregor added.

“Medical is one, where the computer can consider the CT, MRI, or X-ray and determine what the image might reveal,” he suggested.

“Security applications are also possible,” McGregor said.

“It could be used in autonomous vehicles where the AI can take all the data that is being presented and develop algorithms that make self-driving cars possible,” he continued. “It is really about teaching intelligent algorithms for almost anything.”

AI to the Next Level

Visual recognition is just one area of interest. This line of AI research could converge with other advanced computer technologies.

“This goes hand in hand with the Internet of Things,” said McGregor.

“It is more than just connecting everything through the cloud — it’s building the intelligence into devices around us,” he explained. “This is really just a part of all these things that appear to be independent but are in fact very connected.”

Any fears that this could end badly for humanity, with machines rising up against their human masters, are probably just science fiction.

“Hollywood may make a lot of money about machines wanting to kill us, but that involves so much more, including advanced robotics,” McGregor explained.

“We’re not even close to that and likely never will be,” he added. “Right now, we’re just at the point where we’re teaching computers to do things better. It was Alan Turing who suggested that it isn’t that machines can’t learn — they just learn differently.”