The encryption vs. tokenization battle sometimes seems as fierce as the war between Pepsi and Coke, or the Cubs versus the White Sox. A lot has been written recently about securing data in the cloud, and the merits of the two methodologies are constantly being debated. The good news is that an argument over which is superior is far better than the alternative — no data protection in the cloud.

Securing data while in storage or in transit is mandatory in today’s business climate. From online retail processing and accessing personal medical records via the Web to managing financial activity and government information, implementing data security to protect — or even hide — sensitive information is becoming the norm.

What Are Encryption and Tokenization?

There are a million definitions out there for both encryption and tokenization, so for the sake of this discussion, here is a brief description of each:

Tokenization is a relatively new methodology, created in 2005, that secures specific sensitive data by replacing it with a non-sensitive, nondescript set of values. Most commonly, the actual sensitive data is stored locally in a protected location or at a third-party service provider. Tokens are used to prevent unauthorized access to personal information such as credit card numbers, Social Security numbers, financial transactions, medical records, criminal records, driver and vehicle information, and even voter records.

With this process, a token is generated in one of several ways: either to match the format of the original data it is hiding, or as an entirely arbitrary set of values that is then mapped back to the sensitive information. In either case, an authorized system recovers the original by looking up the token in that mapping; where the stored originals are themselves encrypted, the lookup also involves decrypting them to make the information readable again.
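The two ways of generating a token described above can be sketched roughly as follows. This is a minimal illustration, not a production design: the `TokenVault` class and its method names are hypothetical, the mapping lives in an in-memory dictionary, and a real vault would sit in a protected store with access controls.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory vault that maps tokens back to sensitive values."""

    def __init__(self):
        self._token_to_value = {}

    def _format_preserving(self, value):
        # Swap every digit for a random digit; keep separators such as "-".
        return "".join(
            secrets.choice("0123456789") if ch.isdigit() else ch
            for ch in value
        )

    def _arbitrary(self, value):
        # An opaque random token with no relation to the original format.
        return secrets.token_hex(16)

    def tokenize(self, value, preserve_format=True):
        if preserve_format and not any(ch.isdigit() for ch in value):
            raise ValueError("format-preserving mode needs at least one digit")
        make = self._format_preserving if preserve_format else self._arbitrary
        token = make(value)
        # Retry in the rare case of a collision or of reproducing the original.
        while token in self._token_to_value or token == value:
            token = make(value)
        self._token_to_value[token] = value
        return token

    def detokenize(self, token):
        # Only a system with access to the vault can recover the original.
        return self._token_to_value[token]


vault = TokenVault()
same_shape = vault.tokenize("123-45-6789")                      # e.g. "804-19-3350"
opaque = vault.tokenize("123-45-6789", preserve_format=False)   # 32 hex characters
```

A format-preserving token lets downstream systems that expect a nine-digit value keep working unchanged, while an opaque token makes it obvious that the field no longer holds real data.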

This approach has gained popularity as a way to secure data without a lot of overhead, because only the sensitive portion of the package is “tokenized.” For example, let’s say a payload of information that includes Social Security numbers is being processed. With tokenization, the Social Security numbers are isolated and replaced with tokens (abstract, unrelated sets of numbers), and the package is sent to its destination, where the tokens are swapped back for the real Social Security numbers.
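The Social Security number example might look something like the sketch below. The payload layout, the `TOK_` prefix, and the function names are assumptions made for illustration, and the plain dictionary stands in for whatever protected store holds the real values.

```python
import re
import secrets

# Assumed SSN layout: three digits, two digits, four digits, separated by hyphens.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
# Tokens in this sketch are "TOK_" followed by 16 hex characters (an assumption).
TOKEN_PATTERN = re.compile(r"TOK_[0-9a-f]{16}")


def tokenize_payload(payload, vault):
    """Replace each SSN in the text with a random token and record the mapping."""
    def swap(match):
        token = "TOK_" + secrets.token_hex(8)
        vault[token] = match.group(0)
        return token
    return SSN_PATTERN.sub(swap, payload)


def detokenize_payload(payload, vault):
    """At the destination, swap each token back for the value held in the vault."""
    return TOKEN_PATTERN.sub(lambda m: vault[m.group(0)], payload)


vault = {}
original = "name=Pat Doe; ssn=123-45-6789; plan=gold"
in_transit = tokenize_payload(original, vault)   # the SSN never leaves the sender
restored = detokenize_payload(in_transit, vault)
```

Only the SSN field is touched; the rest of the payload travels as-is, which is where the low overhead comes from.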

A word of caution if you’re about to turn to the so-called authority (aka Wikipedia): the definition you may find there under this name covers a different sense of the word. “Tokenization is the process of breaking a stream of text up into words, phrases, symbols, or other meaningful elements called tokens. The list of tokens becomes input for further processing such as parsing or text mining.” That describes lexical tokenization, a text-processing step, not the data security technique discussed here.

Encryption is described as “the process of transforming information using an algorithm to make it unreadable to anyone except those possessing special knowledge, usually referred to as a key.”

Encryption has been around for decades. It has long been used by the military and government to keep their secrets out of the hands of undesirables. Today, it is widely used by commercial applications to keep their data secure whether in motion or at rest.

Encryption is typically implemented in an end-to-end methodology, meaning data is encrypted from the point of entry to the point the processing occurs. The real purpose of end-to-end encryption is to encrypt the data at the browser level and decrypt it at the point the payload reaches the application or database.

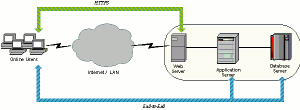

For example, as illustrated in this diagram, users are encrypting data at the start of the process at the browser, and then decrypting the data at the application server or database server.
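To make the "algorithm plus key" idea concrete, here is a deliberately simplified sketch of encrypting at one end and decrypting at the other. It is a toy: it assumes both ends already share a secret key, it builds a keystream from HMAC-SHA256 using only the Python standard library, and it provides no integrity protection. A real deployment would rely on a vetted protocol or library (TLS, or an authenticated cipher such as AES-GCM) rather than anything like this.

```python
import hashlib
import hmac
import secrets


def _keystream(key, nonce, length):
    # Derive pseudo-random bytes by running HMAC-SHA256 over nonce || counter.
    out = bytearray()
    counter = 0
    while len(out) < length:
        block = hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256)
        out += block.digest()
        counter += 1
    return bytes(out[:length])


def encrypt(key, plaintext):
    """Sender side (the browser in the diagram): returns nonce + ciphertext."""
    nonce = secrets.token_bytes(16)  # fresh per message, sent in the clear
    stream = _keystream(key, nonce, len(plaintext))
    return nonce + bytes(p ^ s for p, s in zip(plaintext, stream))


def decrypt(key, ciphertext):
    """Receiver side (the application or database server)."""
    nonce, body = ciphertext[:16], ciphertext[16:]
    stream = _keystream(key, nonce, len(body))
    return bytes(c ^ s for c, s in zip(body, stream))


shared_key = secrets.token_bytes(32)          # assumed to be exchanged securely
wire = encrypt(shared_key, b"card=4111111111111111")
recovered = decrypt(shared_key, wire)         # readable again only with the key
```

Anyone who intercepts `wire` without `shared_key` sees only random-looking bytes, which is also why losing or mishandling the key is as serious as losing the data.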

The Choice Is Yours! Or Is it?

These are the first questions people typically ask: Is encryption or tokenization more secure? Which approach provides the desired level of security? If compliance is an issue or concern, then how does the selected method achieve and maintain compliance? Is there an awareness of the data profile?

The answers to these and many other questions lie within the enterprise’s ability to clearly define the objectives of the business process. Unfortunately, most enterprise IT departments do not have the intimate knowledge needed to make good decisions about what to do with data. The wrong decision related to identifying sensitive data can cost a company millions in lost revenue and legal costs. Yet the ability to secure data is the most important criterion companies weigh when setting their priorities.

The ability to secure data when considering cloud computing has been rated as a top priority in recent industry surveys. For example, in a Gartner survey of corporate data center managers, 85 percent of participants cited “security” as a factor that might inhibit them from deploying cloud-based applications. Ensuring that data is protected at rest and in motion is top of mind for most IT managers.

There is no argument that end-to-end encryption provides the greatest level of data security and confidentiality, because encryption ensures the data is secure all the way from each end point to the processing destination. Because data is encrypted — and therefore unreadable to anyone while it is in motion — and requires an access key in order to decrypt the payload to make it readable, the possibility of someone intercepting the payload and accessing the sensitive data is minimal.

However, there is an element of successful encryption that many companies ignore — and that is the management of the encryption keys, which may be difficult to administer or control. Also, for some businesses, encryption may be overkill, or they may not be willing to sacrifice any potential impact on performance for end-to-end protection. The willingness to sacrifice some level of performance to achieve greater data protection seems to be an interesting trade-off to consider.

With tokenization, the sensitive data itself is neither stored in the payload nor sent in any form to its destination; only the token travels. One of the key benefits of tokenization is ease of use, as you do not need to manage encryption keys. Tokenization is becoming more popular as companies figure out how to make the best use of the technology.

As tokenization becomes better known, there will no doubt be more implementations, primarily because tokenization provides greater flexibility in enabling a business to select (and thereby limit) the data needing to be protected, such as credit card numbers. However, in order to do this successfully, a company must be able to identify the specific data to tokenize, which requires intimate knowledge of its data profile.

The choice really is yours to make. The best advice any industry analyst or vendor can provide to enterprise IT organizations attempting to work through their data security strategy is to help identify what data needs to be secure, and how the data will be used. The business processes being implemented will also have a bearing on which approach a company should take.

It is not necessary to choose one technology over the other; adopting a hybrid approach is an option. Whether the sensitive data can be selected and identified, and whether locking down all the data is critical and necessary, will determine whether full encryption, tokenization, or a combination of the two is right for your firm.

Stuart Lisk is senior product manager at Hubspan.

Is it really either/or? You hint at a hybrid approach at the end of the article, but I’d argue that this is a permanent solution rather than a way of A/B-ing both products. Obviously the cost of using both may be too high for some businesses, but the plain fact is that only a multi-pronged approach will protect against all security holes (due to issues with browser implementation, neither SSL nor two-factor authentication is infallible). As an evangelist for VeriSign’s extended validation SSL program, which requires a more robust background check than regular certs and in turn protects sites with an unspoofable green URL bar, I’m often discussing how these products can be paired together. Also: Don’t forget about problems with malware, which neither SSL nor tokenization will properly safeguard against. Point being: security is a many-splendored thing.