Google joined the ranks of fellow cloud service providers Amazon and Microsoft on Tuesday with the announcement of custom silicon for its data centers.

Google’s Axion line of processors represents its first Arm-based CPUs designed for the data center. “Axion delivers industry-leading performance and energy efficiency and will be available to Google Cloud customers later this year,” Amin Vahdat, the company’s vice president and general manager for machine learning systems and cloud AI, wrote in a company blog.

According to Google, Axion processors combine the company’s silicon expertise with Arm’s highest-performing CPU cores. The company says the resulting instances deliver up to 30% better performance than the fastest general-purpose Arm-based instances available in the cloud today, and up to 50% better performance and up to 60% better energy efficiency than comparable current-generation x86-based instances.
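To put the efficiency figure in concrete terms, here is a minimal Python sketch. It assumes, since Google's statement does not spell this out, that "60% better energy efficiency" means 60% more work per unit of energy. The function name is hypothetical and the result is a best case, because the claim is an "up to" figure.

```python
def relative_energy_for_same_work(efficiency_gain: float) -> float:
    """Energy needed for a fixed amount of work, relative to the baseline.

    Assumption: efficiency_gain=0.60 means 1.6x the work per unit of energy.
    """
    if efficiency_gain <= -1.0:
        raise ValueError("efficiency_gain must be greater than -1.0")
    return 1.0 / (1.0 + efficiency_gain)


# 0.60 is the "up to 60%" figure quoted by Google for Axion vs. x86 instances.
relative_energy = relative_energy_for_same_work(0.60)
energy_saved = 1.0 - relative_energy

print(f"Energy for the same work: {relative_energy:.1%} of baseline")
print(f"Best-case energy saved:   {energy_saved:.1%}")
```

Under that reading, a 60% efficiency gain cuts energy for the same work by a bit more than a third, not by 60%.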

Google is the third of the big three cloud service providers to develop its own CPU designs, explained Bob O’Donnell, founder and chief analyst with Technalysis Research, a technology market research and consulting firm in Foster City, Calif.

“All these companies want to have something that’s unique to them, something they can write their software to run on and to do things more power efficiently,” he told TechNewsWorld.

“Data center power usage is one of their greatest costs, and Arm designs are generally more power efficient than Intel,” he continued. “Google’s not going to get rid of Intel, but Axion gives them a new option, and for certain types of workloads, it’s going to be a better alternative.”

There are also market considerations. “Everyone wants an alternative to Nvidia,” O’Donnell said. “Nobody wants a company that has a 90% market share unless you’re the company with the 90% share.”

Bad News for Intel

Benjamin Lee, an engineering professor at the University of Pennsylvania, explained that Google can customize its hardware components for greater performance and efficiency by designing its own CPU.

“Much of this efficiency comes from building custom controllers that handle important computation for security, networking, and hardware management,” he told TechNewsWorld. “By handling the bookkeeping computation required in data center servers, these custom hardware controllers free more of the CPU for user and customer computation.”

The use of Arm processors in the data center is unfortunate news for Intel, which has historically dominated the data center market with its x86 processors, he noted.

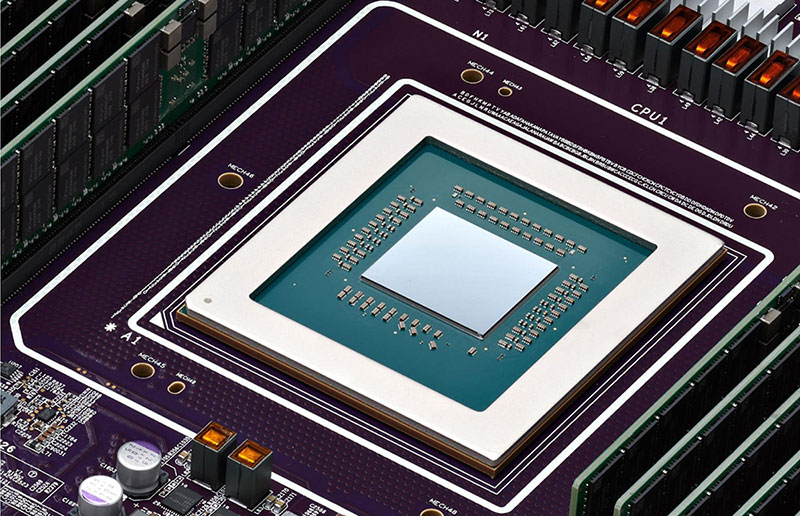

Google’s Axion processor (Image Credit: Google)

“This announcement shows an accelerating transition away from x86 architectures and more towards Arm for the server market, which is the ultimate prize for chip companies,” added Rodolfo Rosini, co-founder and CEO of Vaire, a reversible computing company with offices in Seattle and London.

“I suspect Arm will get more out of this announcement than Google in the long run,” he told TechNewsWorld.

Rise of Proprietary Silicon

Axion is another example of major players — such as Apple and Tesla — investing in their own chip designs, observed Gaurav Gupta, vice president for semiconductors and electronics at Gartner, a research and advisory company based in Stamford, Conn.

“We see this as a major trend,” he told TechNewsWorld. “We call it OEM Foundry Direct, where OEMs bypass or take assistance with design firms and go directly to the foundry to get their silicon. They do this for better cost and roadmap control, IP synergies, and such. We will continue to see more of this.”

With this announcement, Google is putting its substantial financial and technical weight behind a market trend for semiconductors — like CPUs and accelerators — to be designed according to how they are going to be used, explained Shane Rau, a semiconductor analyst at IDC, a global market research company.

“No single CPU or accelerator can handle all the workloads and applications that Google’s cloud customers have, so Google is bringing another choice for CPU and AI acceleration to them,” he told TechNewsWorld.

TPU v5p General Availability

Alongside the Axion news, Google announced the general availability of Cloud TPU v5p, the company’s most powerful and scalable Tensor Processing Unit to date.

The accelerator is built to train some of the largest and most demanding generative AI models, the company explained in a blog post. A single TPU v5p pod contains 8,960 chips that run in unison, more than twice as many as a TPU v4 pod. On a per-chip basis, the v5p delivers over 2x higher FLOPS and 3x more high-bandwidth memory.
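The pod comparison can be checked with a few lines of Python. This is a rough sketch: the 8,960-chip figure comes from Google's announcement, while the 4,096-chip size for a TPU v4 pod is an assumption not stated in this article. The pod-level estimate also treats "over 2x higher FLOPS" as a 2x floor per chip and ignores any scaling losses across chips.

```python
V5P_CHIPS_PER_POD = 8960   # stated in Google's announcement
V4_CHIPS_PER_POD = 4096    # assumption: not given in the article
PER_CHIP_FLOPS_GAIN = 2.0  # "over 2x" per chip, treated here as a floor

# How many more chips a v5p pod holds than a v4 pod.
chip_ratio = V5P_CHIPS_PER_POD / V4_CHIPS_PER_POD

# Idealized peak compute per pod: more chips times faster chips.
pod_flops_gain_floor = chip_ratio * PER_CHIP_FLOPS_GAIN

print(f"Chips per pod, v5p vs. v4: {chip_ratio:.2f}x")
print(f"Idealized peak FLOPS per pod: at least {pod_flops_gain_floor:.2f}x")
```

Real training throughput depends on interconnect, memory, and software, so the pod-level number should be read as an upper-level estimate of peak compute rather than a benchmark result.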

“Google’s development of Tensor SoCs for its Pixel phones and the advancement of more powerful Tensor Processing Units for data center use underscore its commitment to accelerating machine learning workloads efficiently,” observed Dan deBeaubien, head of innovation at the SANS Institute, a global cybersecurity training, education and certification organization.

“This distinction highlights Google’s approach toward optimizing both mobile and data center environments for AI applications,” he told TechNewsWorld.

Abdullah Anwer Ahmed, founder of Serene Data Ops, a data management company in Dublin, Ohio, said that Google’s TPU gives Google Cloud customers another option for lower-cost inferencing.

Inference costs are what users pay to run their machine-learning models in the cloud. Those costs can be as much as 90% of the total cost of running ML infrastructure.

“If a startup is already using Google Cloud and their inferencing costs start to overtake training costs, it may be a suitable option to move to Google TPUs for a cost reduction, depending on the workload,” Ahmed told TechNewsWorld.
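The decision Ahmed describes comes down to simple arithmetic, sketched below in Python. Every dollar amount and the 25% price reduction are hypothetical placeholders, not figures from Google or from Ahmed, and the function names are invented for illustration.

```python
def inference_share(training_cost: float, inference_cost: float) -> float:
    """Fraction of total ML spend that goes to inference."""
    total = training_cost + inference_cost
    if total <= 0:
        raise ValueError("total cost must be positive")
    return inference_cost / total


def estimated_savings(inference_cost: float, price_reduction: float) -> float:
    """Savings if inference moves to hardware that is cheaper by price_reduction."""
    if not 0.0 <= price_reduction <= 1.0:
        raise ValueError("price_reduction must be between 0 and 1")
    return inference_cost * price_reduction


# Hypothetical monthly bill for a startup, in dollars.
monthly_training = 2_000.0
monthly_inference = 18_000.0

share = inference_share(monthly_training, monthly_inference)
savings = estimated_savings(monthly_inference, price_reduction=0.25)

print(f"Inference share of ML spend: {share:.0%}")
print(f"Estimated monthly savings:   ${savings:,.0f}")
```

The higher the inference share, the more any per-inference price cut matters. Actual savings depend on the workload and on migration costs, which this sketch leaves out.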

Promoting Sustainability

Beyond improved performance, Google said its new Axion chips will help customers meet sustainability goals. “Beyond performance, customers want to operate more efficiently and meet their sustainability goals,” Vahdat wrote. “With Axion processors, customers can optimize for even more energy efficiency.”

“Data centers use a lot of power since they run 24/7. Reducing power consumption does help contribute to sustainability,” Ahmed said.

“The Arm-based CPU is much more energy efficient than the x86,” added O’Donnell. “That’s a huge deal because energy costs are enormous in these data centers. These companies have to work to reduce that. That’s one of the reasons they’re all leveraging Arm.”

“As the demands for compute go higher, you can’t do that forever because there’s only so much capacity in the world, so you have to be smarter about it,” he added. “That’s what they’re all working to do.”