An online map of surveillance cameras in New York City is in the works.

The map project is part of a larger campaign called “Ban the Scan,” sponsored by Amnesty International, which is partnering on the initiative with several other tech advocacy, privacy, and civil liberties groups.

“We need a map so citizens can have a sense of where they are being observed by the NYPD as they go about their everyday lives,” explained Michael Kleinman, director of Amnesty USA’s Silicon Valley Initiative.

“It also gives the citizens of New York, and more broadly people who are concerned about the issue of facial recognition, a sense of just how pervasive this kind of surveillance is,” he told TechNewsWorld.

Data for the map will be “crowdsourced” by volunteers. This spring, volunteers will be able to roam the streets of Gotham and identify surveillance cameras using a software tool that runs in a Web browser. Data gathered by the tool is integrated with Google Street View and Google Earth to create the map.

“To surveil the population as a whole, you don’t need a special facial recognition camera,” Kleinman explained. “As long as law enforcement has the imagery from that camera, they can conduct facial recognition at the back end.”

“That’s why we say that in any city you can think of, facial recognition is just one software upgrade away,” he continued.

“It’s not an issue of I’m going to reroute my daily commute away from facial recognition cameras,” he added. “We can push the New York City Council to ban police use of this technology.”

Measures banning or restricting the use of facial recognition have already been adopted in Boston, San Francisco and Portland, Ore.

Game-Changing Technology

Yuanyuan Feng, a researcher at the Institute for Software Research at Carnegie Mellon University in Pittsburgh, explained that there’s a transparency problem with the way the technology is used now.

“There’s no transparency about the retention time of the data, what it’s being used for, and what are the sharing practices,” she told TechNewsWorld.

Most police departments are secretive about their use of facial recognition, not only with the public but also with individuals who are arrested, added Jake Laperruque, senior counsel for the Project on Government Oversight, a government watchdog group in Washington, D.C.

“Most departments take the position that if it isn’t introduced as evidence in a court case, they don’t have to talk about it at all,” he told TechNewsWorld.

That stance seems to belie the significance of the technology to law enforcement.

“This isn’t just the latest model of car or walkie-talkie,” Laperruque said. “This is game-changing technology for how policing works.”

Politicized Technology

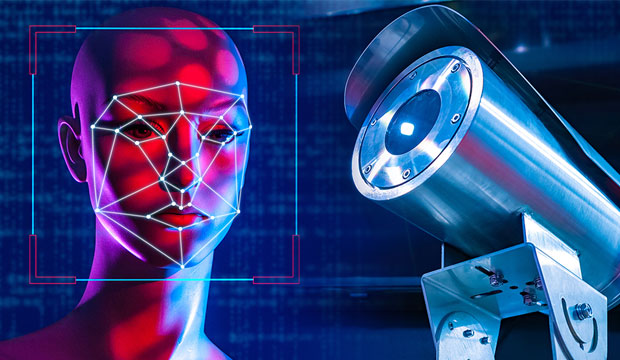

Karen Gullo, an analyst with the Electronic Frontier Foundation, a San Francisco-based online rights advocacy group, explained that facial recognition is one of the most pervasive and intrusive of all surveillance technologies.

“It’s being utilized by law enforcement and government entities with little to no oversight and limitations,” she told TechNewsWorld.

“Face surveillance is becoming an all-encompassing tool for government to track where we are, what we are doing, and who we are with, regardless of whether we’re suspected of a crime or not,” she continued.

“Programs that provide the public with information about how and where surveillance tools are being used to spy on people…are an important check on law enforcement and give citizens knowledge to demand accountability and public input,” she added.

Facial recognition has become a politicized technology, argued George Brostoff, CEO of Sensible Vision, a face authentication company in Cape Coral, Fla.

“When something becomes politicized, everything becomes black and white,” he told TechNewsWorld. “There are few things in this world that are black and white.”

“People don’t want to be tracked,” he said. “That’s what should be discussed, not just facial recognition. What does the government know about me? How does the government use my information, including my face? Those are things that should be discussed in general.”

Racial Bias

One of the chief criticisms of facial recognition systems is that they are not only inaccurate but also biased.

Gullo noted that studies have shown facial recognition to be prone to errors, especially when identifying Black Americans.

A 2012 study co-authored by the FBI, for example, showed that accuracy rates for African Americans were lower than for other demographics.

In fact, Gullo continued, the FBI admitted in its privacy impact assessment that its system “may not be sufficiently reliable to accurately locate other photos of the same identity, resulting in an increased percentage of misidentifications.”

In another study by MIT, she added, commercially available facial recognition systems had error rates of 34 percent for darker-skinned women, far higher than their error rates for lighter-skinned men.

“This means that face recognition has an unfair discriminatory impact,” Gullo said.

She added that cameras are also over-deployed in neighborhoods with immigrants and people of color, and new spying technologies like face surveillance amplify existing disparities in the criminal justice system.

Hampering Law Enforcement?

Since those studies were performed, facial recognition technology has improved, Brostoff countered, though he added that even improved technology can be misused. “The question is not, ‘Is facial recognition biased?’ It’s, ‘Is the implementation biased?’” he asked.

He added that not all facial recognition algorithms are biased. “The ones tested had an issue,” he said.

“Even in those tested,” he continued, “not all of them had inaccuracies solely due to a bias. They were also due to their settings. The percentage for a match was set too low. If a match is set too low, the software will identify multiple people as potentially the same person.”

“Some of it was the quality of images in the database,” he added. “If an algorithm doesn’t have a filter to say this image is too poor to be accurate, then a bad match will result. Now, 3D cameras can be used to generate depth information on a face to produce more detail and better accuracy.”
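To illustrate the two settings Brostoff describes, a match threshold and an image-quality filter, here is a minimal Python sketch. It assumes each database candidate already has a similarity score between 0 and 1 and that a quality score for the probe image is available; the names `Candidate`, `filter_matches`, `match_threshold`, and `min_quality`, and all of the numbers, are hypothetical and do not reflect any vendor's or police department's system.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Candidate:
    # Hypothetical record: an identity and how closely its photo resembles the probe image.
    person_id: str
    similarity: float  # assumed scale: 0.0 (no resemblance) to 1.0 (identical)


def filter_matches(
    candidates: list[Candidate],
    probe_quality: float,
    match_threshold: float = 0.9,  # hypothetical cutoff for declaring a match
    min_quality: float = 0.5,      # hypothetical minimum usable image quality
) -> list[Candidate]:
    """Return candidates whose similarity meets the threshold.

    If the probe image is too poor to be reliable, return no matches
    instead of reporting a weak (likely wrong) one.
    """
    if probe_quality < min_quality:
        return []
    return [c for c in candidates if c.similarity >= match_threshold]


candidates = [
    Candidate("A", 0.95),
    Candidate("B", 0.72),
    Candidate("C", 0.68),
]

# A strict threshold yields a single candidate.
print([c.person_id for c in filter_matches(candidates, probe_quality=0.8)])
# A threshold set too low flags several different people as the same person.
print([c.person_id for c in filter_matches(candidates, probe_quality=0.8, match_threshold=0.6)])
# A poor-quality image is rejected outright.
print(filter_matches(candidates, probe_quality=0.3))
```

With these made-up scores, lowering the threshold from 0.9 to 0.6 turns one candidate into three, which is the "multiple people as potentially the same person" effect Brostoff describes, while the quality check returns nothing rather than a bad match.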

When facial recognition bans are proposed, law enforcement’s response is that removing the technology from its toolbox will hamper its efforts to keep the public safe. Critics of the technology disagree.

“They can use other tools to track down criminal suspects,” observed Mutale Nkonde, CEO of AI For the People, a nonprofit communications agency and part of the Ban the Scan coalition.

“For example, during the riot at the Capitol, the FBI used cell phone data to find out who was there and to create a no-fly list,” she told TechNewsWorld.

“The idea that by not using facial recognition, law enforcement will not be able to do their job requires quite a leap of faith when you consider all the power and all the resources law enforcement already has,” Kleinman added.

“Our concern should not be that law enforcement has too little power,” he continued. “Our concern should be what does the expansion of the power of law enforcement mean to all of us?”

“The argument that if we can’t do X, then we will be crippled is an argument that can be used to justify an endless expansion of law enforcement power to surveil us,” he added.