The SIGGRAPH conference is an annual event that focuses on the latest in computer graphics and other interactive arts. It is a multidisciplinary show that mixes computer imaging with physical creations. It is also a crossroads for the film, video game, commercial, research and education industries. It looks to the future while celebrating the recent and not-so-recent past.

Although the conference is not a typical launchpad for new products, companies often use it for technology-related debuts. At this year’s event, there were product introductions from AMD, Dell, HP, Nvidia and others.

Impressive Entries

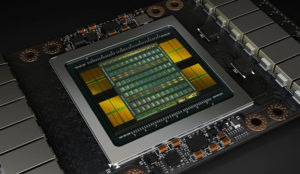

AMD used the event as a launch platform for its workstation-class processor, Ryzen Threadripper, and for its latest graphics processor, the Vega 10. AMD also rolled out the Radeon Pro SSG, a card designed for 4K and 8K video editing that carries 2 TB of onboard SSD storage. This lets the SSG hold more video data locally than any other graphics card, which speeds up processing.

While the Ryzen Threadripper had been announced earlier, the event revealed its price, clock speeds and availability. Vega 10 is AMD's new entry in the US$350 to $500 graphics card market. It uses second-generation high-bandwidth memory (HBM2), which is designed to reduce power consumption and board footprint while offering more bandwidth than GDDR (graphics double data rate) memory. HBM2 allows AMD to build shorter PCIe cards with outstanding performance.

Dell was at SIGGRAPH to celebrate 20 years in the workstation business. What started out as a beige-box workstation with a Pentium II processor and an Elsa graphics card has endured, while other workstation vendors of the time, like SGI and Sun, are gone. The market is now left to Dell and HP to duke it out for the lead.

HP rolled out some new products, including a cool industrial-quality backpack PC for untethered virtual reality, the Z VR Backpack PC.

I got to try the Z VR backpack setup while testing a wide-field-of-view (FoV) head-mounted display (HMD). The backpack PC is designed for commercial applications and uses Intel Xeon processors and Nvidia Quadro graphics cards. The Z backpack, which includes a belt with hot-swappable batteries, was comfortable to wear. An optional desktop dock also allows it to be used as a desktop PC.

The StarVR HMD, a joint effort by Acer and Starbreeze Studios, also targets commercial applications (at least for now). The headset offers a 210-degree horizontal field of view, compared with roughly 100 degrees horizontal and 110 degrees vertical for a stock HTC Vive.

The HMD uses two 2560×1440-pixel OLED displays for a combined resolution of 5K (5120×1440). The headset is not ready for home use; it targets training, arcade and amusement-park experiences.

Nvidia focused its events on software products for developers. In keeping with its corporate focus on machine learning and artificial intelligence, it applied AI to graphics in a number of products. Its AI for facial animation, showcased in a demo by Remedy Entertainment, used voice alone to animate a face. The company also used AI to improve antialiasing, drawing on temporal information to understand motion in the image.

The company also showed AI light transport, which applies machine learning to ray tracing to accelerate rendering. While the many light samples per pixel are still converging, it quickly removes noise from the ray-traced image, using a network trained on reference images to predict the likely correct value of each pixel.

The fun part of the Nvidia booth was a robot you could train in VR. You could then apply your training to a real robotic arm. The trained robot, Isaac, would try to match dominoes with a human player.

VR Is the Star at SIGGRAPH

SIGGRAPH was heavily oriented toward VR and, to a lesser extent, augmented reality. The organizers had created a VR Theater that quickly sold out. Other experiences were open to all attendees at the VR Village.

One interesting experience was a short 360-degree surround presentation made with Lytro Immerge, which showcased its ability to combine footage from multiple light-field cameras into a virtual concert with real depth.

You could move around a singer, see the parallax and hear a cathedral-filling rendition of Leonard Cohen's "Hallelujah" with a background chorus. Although you had to stay within a bounded area, the experience was very immersive, with far more depth than today's 360-degree video. (See the making of the Lytro VR experience.)

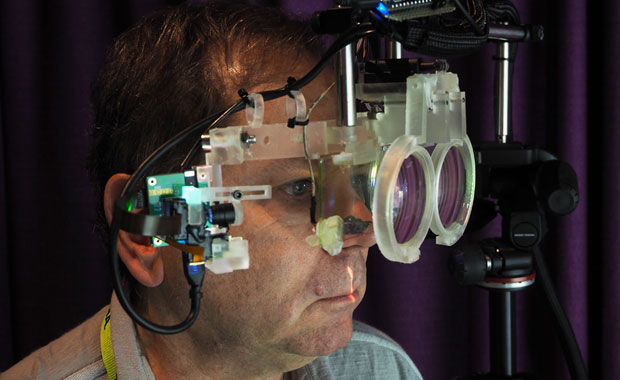

I also had the opportunity to try a VR headset from Neurable that combined eye tracking with brain-activity sensors to select objects in virtual space.

I was skeptical initially, as the training part of the experience was hard to distinguish from a preplanned demo. However, once the training session was over, I did believe I could think “grab” and the VR object would respond.

The HMD relied on eye tracking to determine which object you wanted to grab, but brain activity did seem to trigger the VR action. This was done with completely noninvasive EEG sensors.

Another unique experience was a chance to see Michelangelo's David up close in the Il Gigante virtual reality exhibit sponsored by Epic Games. The statue had been scanned at millimeter-level detail in 1999 (roughly a billion points).

The scan was reconstructed on a workstation, and with the VR headset you could explore the 17-foot (5-meter) statue extremely closely from a virtual scaffold, viewing the sculpting marks among other details.

The most realistic virtual human was the subject of “Meet Mike.” Mike Seymour from the University of Sydney had been scanned in detail to create a hyper-realistic 3D version of his head. The real Mike would speak while a camera captured his facial movements and animated the virtual Mike in real time.

Using the rig, Mike talked to the audience and interviewed other people in VR space. The project is designed to simulate a future of highly interactive, photorealistic avatars.

The most interesting part of SIGGRAPH for me was seeing the bleeding-edge research projects housed in the Emerging Technologies area. Many were still at the science-project stage, but they gave an indication of what to expect in the future.

I tried out an Nvidia-sponsored project on a variable-focus lens for AR or VR that adjusts focus to keep the object you are looking at sharp. It's still early research, but the goal is to create correct depth focusing for virtual objects.

It's in these cutting-edge projects that we can see how VR can create a more personal sense of empathy than film or TV. They also show movies and games converging, both in VR and on flat displays.

For example, at one session Epic Games showed real-time rendered playback driven by a game engine. Because SIGGRAPH is part academic and part commercial, it draws more experimental content and offers a better view of what is possible.