It is starting to worry me how little the responses by tech firms will do to fix the problem of extreme views instead of just driving them underground. A good deal of the reason for this is the excessive focus firms now have on how they are run.

Companies tend to be run tactically, with officials more likely to make decisions that will seem to make a problem go away within a quarter but that do not deal with the cause of the problem. For instance, stock buyback programs have become a near constant, and this practice does push up stock price — but it does nothing to increase company value, improve competitiveness, or grow the customer base.

With extremists, you either want to change views or you want to move them where they can do no harm to the firm. Shutting down communications, with some exceptions, is a bad idea when it comes to extreme views, if your goal is to reduce adherence to them along with related disruptions and violence.

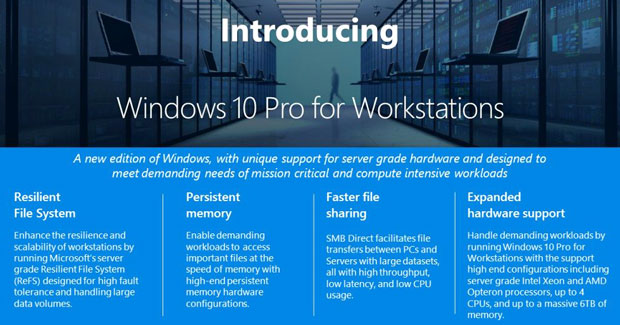

I’ll close with my product of the week: Windows 10 Pro for Workstations, which fixes a mistake Microsoft made in the 1990s.

Killing Discussion

Two actions caught my attention last week: Discord shutting down communities tied to the alt-right, and Facebook shutting down internal chat groups for crossing HR lines about harassment.

These actions followed Google’s foolish firing of an employee who appeared to have fringe views but also seemed to have substantial support within Google, effectively making him a martyr.

Discord’s move at least made sense, though it would have no long-term impact on the behavior. Facebook’s and Google’s moves were wrongheaded, effectively making the problem both less visible and worse.

Discord provides a forum for people to discuss their political views. It is a service largely used by people who don’t work for Discord. Being known as a service that provided support for extremists undoubtedly created brand risk. It might have been driving larger groups from using the service for fear of being connected to fringe groups or being boycotted by folks who objected to what the extremists said.

In Facebook’s case, it was employees who were behaving badly. Certainly, they created potential hostile workplace legal problems. In short, Facebook could be sued by other employees using the posts as evidence. Thus, the justification for shutting down the discussion group does have a solid foundation in litigation mitigation.

However, neither response addressed the bad behavior. This is more problematic for Facebook, because the offending employees remained employed, and the firm simply lost one method for identifying them.

Coverup vs. Correction

I was on a Web page last week that promised a low-cost way to fix the check engine light on your car. The picture was of a person removing the fuse that powered the light. It reminded me of my second car. I was driving fast in 110-degree weather and the overheat light came on, so I pulled over and it went out.

I continued, and after driving about 30 miles, my engine came apart explosively. What had happened was the light bulb had burned out, but I thought the problem had gone away. Instead of realizing the car was low on water — an easy fix — I ruined the engine and ended up losing the car. If you eliminate the visible indicator of a problem, it can get worse — you’ve just hidden it.

What Facebook did was simply remove the evidence of the bad behavior and a place to look for it. Now it appears that it doesn’t have a problem, but the harassing employees remain in place. Given that Facebook hired them in the past, it likely will hire more of them in the future. The bad behavior could quietly increase, and Facebook would simply be less aware of it.

As for Discord, it had a more difficult choice to make, because the folks behaving badly didn’t work for the company. It can wash its hands of the groups, but by giving up contact it also loses its ability to try to fix the underlying behavior. It goes from being a place where people can discuss political issues broadly to being one that censors, which also hurts its brand image.

Discord’s move made the issue someone else’s problem so I can understand it in that sense. However, Facebook still has the problem — it just has one less tool to deal with it.

A Better Path

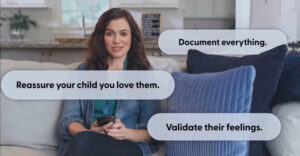

I believe that you can change minds through engagement. If you cut off engagement, you cut off influence. You can’t change someone’s mind if you don’t engage that mind.

For instance, when you had an argument with your parents, which worked better — them engaging with you about why you were wrong, or them telling you to shut up? In my own case, when the latter happened, I believed more firmly that I was right and that they were misbehaving. Shutting me up didn’t change my mind — and as I grew older, it didn’t shut me up either.

If we watch engagements on the Web, what seems clear is that those who are good at thinking on their feet tend both to win the engagement and — often, though not always — shut down the troll. It is a skill you see with a lot of comedians, but there are other folks who are naturally good at debate, can think quickly, and have a broad set of relevant facts at their fingertips.

A better path for Discord might have been to hire some skillful communicators and have them enter the discussion. Ban individuals who cross clear lines, like threatening violence, but leave up the forum with the goal of changing minds. The goal would not be to turn conservatives into liberals, but to bring the discussions back to facts and problem solving, and away from abusive behavior and real fake news.

Particularly in the case of Facebook, employees who are way over the line either need to be put into a psychiatric program or terminated, not forced underground. These are the kinds of people who can act out physically, and making them invisible, much like my burned-out warning light, only puts off the problem and may make any eventual outcome worse.

Wrapping Up

When people behave badly, we need to focus on correcting the behavior — not making it someone else’s problem, and certainly not driving the behavior underground. Many of the efforts to create diversity and remove racism have focused on removing the visible representations of it, not on changing minds. Forty years after aggressive attempts to end discrimination and racism in the U.S., both seem stronger than ever.

You don’t make an illness go away just by dealing with the symptoms — you need to come up with a plan to eliminate the disease. Diversity of opinion and people who question facts are necessary, because better decisions are best founded on real facts and not false beliefs.

Take North Korea. We’ve had a long-term policy of non-engagement, and it has gone from being an annoyance to becoming a nuclear power. That is not a mistake we want to keep making.

The tech industry, beyond all others, should be focused largely on finding facts — and not on staying aligned with what now is politically correct. In short, we should want to make things better — not run and hide from serious problems that we have the tools to correct. This is our moment, and we seem to be screwing it up.

We need to support free speech, because dialogue is a better path to the truth than authority. If you are talking, you can change hearts and minds. When dialogue is shut off, the path to resolution tends to be far more violent. Perhaps we should be more eager to emulate Beatrice Hall than Joseph McCarthy.

One of the biggest mistakes Microsoft made last decade was to take a platform designed for workstations and servers and try to blend it for all users. The goal was to cut costs, but the result was far more aggravation for those who didn’t need the more robust platform. The server platform seemed to lose its way, and those who really needed a workstation platform were forgotten. The server problem eventually was corrected — but until recently, those who needed workstation-level performance remained forgotten.

Well, given that an engineer now runs Microsoft, workstation users apparently are forgotten no longer. There is now a special version of Windows, Windows 10 Pro for Workstations, that is particularly for them.

There are four core elements that make this product interesting. One is support for a new kind of memory, NVDIMM-N, which is high-speed non-volatile memory. Basically, it is a blend of high-speed system memory and flash, potentially giving a massive performance advantage with large files (I’m thinking it would be wicked with some games as well).

Second is a new file system, ReFS, that is particularly resilient with regard to data corruption with large files.

Third, there is support for higher-speed file sharing, which is critical for large files.

Finally, you can have four CPUs, including server-grade CPUs if you need even more processing power. (I now have in my head a water-cooled, four-CPU AMD Threadripper system becoming the new ultimate system build.)

Last week I spoke about Hollywood’s increasing ability to emulate movies to ensure success before spending millions making bad ones. This is the kind of workstation we need to do that, but it also will be used to build the cars, buildings and software of tomorrow — not to mention help our financial analysts make sure our stock portfolios support our eventual retirement.

Those who use workstations are the people who are designing and building our future. They need and deserve more focused support. Windows 10 Pro for Workstations provides that support, and it is my product of the week.

"Many of the efforts to create diversity and remove racism have focused on removing the visible representations of it, not on changing minds."

Very good thought!

However, does a tech company have the resources or the moral authority to change people’s minds, and hearts? Rob, if even your parents tried but couldn’t change your thinking, how can some unconnected-to-you corporation whose bottom line is profit (and not psychological or spiritual counseling) have any real influence on anyone’s beliefs?

It seems that you’re proposing that tech companies can help by becoming educators, and this is a noble idea. But as you point out, "Forty years after aggressive attempts to end discrimination and racism in the U.S., both seem stronger than ever." Unfortunately, the progressive idea of education, coupled with legislation, hasn’t done it. The problem is deeper.