The world of high performance computing (HPC) is expanding every day, particularly in the fields of geosciences, molecular biology and medical diagnostics, where scientists are increasingly turning to supercomputers to crunch massive amounts of data via complex simulations and applications.

Graphics processing unit (GPU) solution provider Nvidia has developed a new line of GPU-focused solutions that transfer many of the calculations required by scientific and graphics-heavy applications to the GPU rather than the central processing unit (CPU) found in more traditional PCs. Moving computations to the GPU isn’t unprecedented, particularly for the crunching of graphical effects in games, but Nvidia says the scale of the company’s new Tesla-branded solutions is groundbreaking.

“Today’s science is no longer confined to the laboratory — scientists employ computer simulations before a single physical experiment is performed. This fundamental transition to computational methods is forging a new path for discoveries in science and engineering,” said Jen-Hsun Huang, president and CEO of Nvidia. “By dramatically reducing computation times, in some cases from weeks to hours, Nvidia Tesla represents the single most significant disruption the high-performance computing industry has seen since Cray 1’s introduction of vector processing.”

Faster and Faster

Using Tesla-based GPU processing solutions, scientists said they are already seeing massive increases in processing speed, and they’re getting it from systems much smaller than supercomputers.

“Many of the molecular structures we analyze are so large that they can take weeks of processing time to run the calculations required for their physical simulation,” explained John Stone, senior research programmer at the University of Illinois Urbana-Champaign.

“Nvidia’s GPU computing technology has given us a 100-fold increase in some of our programs, and this is on desktop machines where previously we would have had to run these calculations on a cluster. Nvidia Tesla promises to take this forward with more flexible computing solutions,” he said.

The Guts

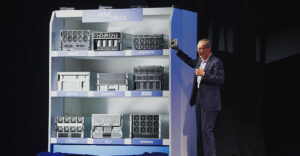

There are three primary components of the Tesla family of GPU computing solutions.

First, there is the Nvidia Tesla GPU Computing Processor, which is a dedicated computing board that scales to multiple Tesla GPUs inside a single PC or workstation. The Tesla GPU features 128 parallel processors, and Nvidia says it can deliver up to 518 gigaflops of parallel computation. It can be used in existing systems partnered with high-performance CPUs.

Second, the Nvidia Tesla Deskside Supercomputer is a scalable computing system that includes two Nvidia Tesla GPUs and attaches to a PC or workstation through an industry-standard PCI-Express connection. With multiple deskside systems, Nvidia says, a standard PC or workstation is transformed into a personal supercomputer that can deliver up to eight teraflops of compute power to the desktop.

Third, the Nvidia Tesla GPU Computing Server is a 1U server housing up to eight Nvidia Tesla GPUs, which Nvidia says together contain more than 1,000 parallel processors and add teraflops of parallel processing to clusters. This server model is designed to fit into datacenters; for example, Nvidia says it’s targeting customers in the oil and gas industries, who could now add the Tesla GPU Computing Server to their own datacenters.
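
A rough consistency check on the figures above, using only the numbers Nvidia quotes and assuming (this is an assumption, not a statement from Nvidia) that the 518-gigaflop peak and the 128-processor count apply to every GPU in each configuration:

$$
\begin{aligned}
\text{Deskside unit:}\quad & 2 \times 518\ \text{GFLOPS} = 1036\ \text{GFLOPS} \approx 1\ \text{TFLOPS} \\
\text{Eight teraflops:}\quad & 8000\ \text{GFLOPS} \div 1036\ \text{GFLOPS} \approx 7.7 \;\Rightarrow\; \text{about eight deskside units} \\
\text{1U server:}\quad & 8 \times 128 = 1024 > 1000\ \text{parallel processors}
\end{aligned}
$$

These are peak figures, so they indicate how the quoted totals fit together rather than what any particular application would achieve.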

Roadblocks to Adoption?

Tesla works on the premise of parallel computation, where many calculations occur at the same time. To take advantage of Tesla, however, applications must be created with parallel computing strategies in mind, and that’s where Nvidia’s CUDA software development solution comes in. CUDA includes a C compiler for the GPU, a debugger/profiler, a dedicated driver and standard libraries. CUDA simplifies parallel computing on the GPU by using the standard C language to create programs that process large quantities of data in parallel.
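
To make the idea concrete, here is a minimal sketch of what a data-parallel CUDA program can look like. It is an illustration only, not code from Nvidia or from the researchers quoted here: the names `saxpy_kernel` and `saxpy_on_gpu` are hypothetical, the block size of 256 threads is an arbitrary choice, and the calls are those of the current CUDA runtime API, which may differ in detail from the toolkit that shipped alongside the first Tesla products.

```cuda
#include <cuda_runtime.h>
#include <stddef.h>

// Kernel: runs on the GPU. Each thread computes exactly one output element,
// so n elements are processed by (at least) n threads running in parallel.
__global__ void saxpy_kernel(int n, float a, const float *x, float *y)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;  // global index of this thread
    if (i < n) {                                    // the last block may overshoot n
        y[i] = a * x[i] + y[i];
    }
}

// Host wrapper (hypothetical name): copies the inputs to GPU memory, launches
// the kernel, and copies the result back into y.
// Returns 0 on success and -1 if any CUDA call fails.
int saxpy_on_gpu(int n, float a, const float *x, float *y)
{
    if (n <= 0) return 0;

    float *d_x = NULL, *d_y = NULL;                 // device (GPU) copies of x and y
    size_t bytes = (size_t)n * sizeof(float);

    bool ok = cudaMalloc(&d_x, bytes) == cudaSuccess
           && cudaMalloc(&d_y, bytes) == cudaSuccess
           && cudaMemcpy(d_x, x, bytes, cudaMemcpyHostToDevice) == cudaSuccess
           && cudaMemcpy(d_y, y, bytes, cudaMemcpyHostToDevice) == cudaSuccess;

    if (ok) {
        int threads = 256;                          // threads per block (assumed value)
        int blocks = (n + threads - 1) / threads;   // enough blocks to cover n elements
        saxpy_kernel<<<blocks, threads>>>(n, a, d_x, d_y);
        // The copy back waits for the kernel to finish before reading d_y.
        ok = cudaGetLastError() == cudaSuccess
          && cudaMemcpy(y, d_y, bytes, cudaMemcpyDeviceToHost) == cudaSuccess;
    }

    cudaFree(d_x);                                  // freeing a NULL pointer is a no-op
    cudaFree(d_y);
    return ok ? 0 : -1;
}
```

A caller would pass ordinary host arrays, for example `saxpy_on_gpu(n, 2.0f, x, y)`, and read the updated values from `y` afterward. The kernel body is plain C apart from the `__global__` qualifier and the built-in thread indices, which is the sense in which CUDA builds on the standard C language.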

“We’ve tried to remove as much [of the roadblock to adoption] as possible and make CUDA available on all the GPUs, and that was a conscious strategy of trying to make development easy,” Andy Keane, general manager of GPU Computing for Nvidia, told TechNewsWorld.

Nvidia is currently working on education and developer outreach programs to help drive adoption of Tesla solutions, he added.

Built to Take Advantage of Tesla

Here’s an example of how to think about GPU and CPU applications, according to Keane: “The way a parallel application is built with CUDA … if you take a standard CPU application, you’re moving data around, you compute it, you integrate it back with some database and then send it off. That whole application remains the same. What we tell developers to do is, you take that computational element that’s in the middle of your application, the piece that can be parallelized — move that piece to the GPU,” Keane explained. “You’re not moving the whole application over.”
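
The structure Keane describes can be sketched as a three-step program in which only the middle step runs on the GPU. This is a hedged illustration under stated assumptions, not Nvidia's reference code: the function names (`prepare_input`, `compute_on_gpu`, `integrate_results`, `run_pipeline`), the squaring operation and the final sum are all hypothetical stand-ins for whatever a real application loads, computes and stores.

```cuda
#include <cuda_runtime.h>
#include <stdlib.h>

// The parallelizable middle of the application: one GPU thread per element.
__global__ void square_kernel(int n, const float *in, float *out)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {
        out[i] = in[i] * in[i];
    }
}

// Step 1 (stays on the CPU): gather the input. Here it is just 0, 1, 2, ...
static void prepare_input(int n, float *data)
{
    for (int i = 0; i < n; ++i) {
        data[i] = (float)i;
    }
}

// Step 2 (moved to the GPU): copy in, compute in parallel, copy out.
// Returns 0 on success and -1 if any CUDA call fails.
static int compute_on_gpu(int n, const float *in, float *out)
{
    float *d_in = NULL, *d_out = NULL;
    size_t bytes = (size_t)n * sizeof(float);

    bool ok = cudaMalloc(&d_in, bytes) == cudaSuccess
           && cudaMalloc(&d_out, bytes) == cudaSuccess
           && cudaMemcpy(d_in, in, bytes, cudaMemcpyHostToDevice) == cudaSuccess;

    if (ok) {
        int threads = 256;                          // assumed block size
        int blocks = (n + threads - 1) / threads;
        square_kernel<<<blocks, threads>>>(n, d_in, d_out);
        ok = cudaGetLastError() == cudaSuccess
          && cudaMemcpy(out, d_out, bytes, cudaMemcpyDeviceToHost) == cudaSuccess;
    }

    cudaFree(d_in);
    cudaFree(d_out);
    return ok ? 0 : -1;
}

// Step 3 (stays on the CPU): fold the results back into the application.
// Here that simply means summing them.
static double integrate_results(int n, const float *results)
{
    double total = 0.0;
    for (int i = 0; i < n; ++i) {
        total += results[i];
    }
    return total;
}

// The application as a whole: only step 2 differs from a CPU-only version.
int run_pipeline(int n, double *total)
{
    if (n <= 0 || total == NULL) return -1;

    float *in = (float *)malloc((size_t)n * sizeof(float));
    float *out = (float *)malloc((size_t)n * sizeof(float));
    int status = -1;

    if (in != NULL && out != NULL) {
        prepare_input(n, in);                       // step 1: CPU
        status = compute_on_gpu(n, in, out);        // step 2: GPU
        if (status == 0) {
            *total = integrate_results(n, out);     // step 3: CPU
        }
    }

    free(in);
    free(out);
    return status;
}
```

Swapping `compute_on_gpu` for an ordinary CPU loop would leave `prepare_input`, `integrate_results` and `run_pipeline` untouched, which is the point of the approach: the surrounding application does not move.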

The Game-Changing Premise

“This is Nvidia trying to redefine a PC and make themselves more relevant to it,” Rob Enderle, principal analyst for The Enderle Group, told TechNewsWorld.

“In the current market, the way it’s evolving, you’ve got Intel and AMD as the two major platform owners, with Intel being the bigger party. What both of those guys are pretty much saying is that they are going to combine the CPU and the GPU into one, and in three years make Nvidia and the GPU irrelevant,” he explained.

“What Nvidia is doing is creating a counter-argument so that with Tesla and their GPU technology, the CPU is trivial. And much of the value of the system will come from what Nvidia is providing and much less of the value will come from what Intel and AMD is providing … so that Nvidia will stay relevant,” he added. “The underlying premise is that this will eventually redefine the PC … and drop into the general desktop around the time that Intel and AMD will have their converged products. And Nvidia’s hope — and I’m not convinced it’s going to work — is that this Tesla product, which is kind of a return of the coprocessor, will have enough momentum behind it to hold off the other two players.”

In the Now

Regardless of this potential processing showdown, Nvidia stands to fill a growing niche for scientific computing code.

“The Tesla brand is not a flavor of GPU that’s intended for every single customer in the world to use,” Keane noted. “It’s really targeted for a commercial customer who wants to do high-end computing.”

Tesla solutions will be available in August, and while Nvidia is still vague on pricing, the company says Tesla solutions will be priced below what comparable supercomputer-based services charge.