There’s no debate about the need for centralized network monitoring. The potential benefits are numerous, including improved

- end-user productivity,

- network performance,

- application performance and

- security and compliance.

There are three main approaches to network monitoring: Simple Network Management Protocol (SNMP), flow records, and packet-based monitoring. Each has pros and cons when weighed against four criteria:

- data granularity, which ranges from as coarse as just the source and destination to as fine as every bit in every packet;

- data accuracy, since sampled methods can miss critical data and are therefore less accurate, whereas methods that examine every packet are 100 percent accurate;

- overhead, meaning the additional traffic added to the network and the competition for processing power and memory on the network devices themselves; and

- cost.

A Look at SNMP

SNMP is best suited to identifying and describing system configurations. It monitors network-attached devices for basic, high-level conditions such as up/down status, total traffic (bytes and packets), and number of users.
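To make the "up/down" condition concrete: at the interface level, a poller commonly reads the `ifOperStatus` object, whose integer values are defined in IF-MIB (RFC 2863). The sketch below assumes the poll results have already been collected into a dictionary (the interface names, values, and the `summarize` helper are hypothetical) and only shows how the raw integers map to named states; it does not issue any SNMP requests itself.

```python
from __future__ import annotations

from enum import IntEnum


class IfOperStatus(IntEnum):
    """Interface states defined for ifOperStatus in IF-MIB (RFC 2863)."""
    UP = 1
    DOWN = 2
    TESTING = 3
    UNKNOWN = 4
    DORMANT = 5
    NOT_PRESENT = 6
    LOWER_LAYER_DOWN = 7


def summarize(statuses: dict[str, int]) -> dict[str, list[str]]:
    """Group interfaces by the state name behind each raw polled integer."""
    groups: dict[str, list[str]] = {}
    for ifname, value in statuses.items():
        groups.setdefault(IfOperStatus(value).name, []).append(ifname)
    return groups


# Hypothetical poll results: interface name -> raw ifOperStatus value.
polled = {"eth0": 1, "eth1": 2, "eth2": 1}
print(summarize(polled))
```

This is exactly the kind of coarse, device-level answer SNMP is good at: it says an interface is down, not why.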

Because SNMP-based monitoring relies on polling (a request is sent at a specified interval, and the response reports the current state of the system), it has a heavy bandwidth impact: the polled requests and responses travel back and forth across the very network being monitored. Although SNMP-based monitoring provides a basic level of useful information, it is not the best approach for network troubleshooting and root-cause analysis.
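Polling also explains why SNMP reports aggregates rather than conversations: the poller reads a running counter, such as the MIB-II `ifInOctets` object, at each interval and derives throughput from the difference between successive readings. A minimal sketch, assuming 32-bit counters and made-up sample values (the function names are hypothetical):

```python
from __future__ import annotations

COUNTER32_MODULUS = 2**32  # Counter32 values such as ifInOctets wrap at 2^32


def octets_to_bps(prev: int, curr: int, interval_s: float) -> float:
    """Convert two successive octet-counter readings into bits per second."""
    delta = curr - prev
    if delta < 0:  # the counter wrapped past 2^32 - 1 between polls
        delta += COUNTER32_MODULUS
    return delta * 8 / interval_s


def rates_from_samples(samples: list[int], interval_s: float) -> list[float]:
    """Turn a series of counter readings into per-interval throughput."""
    return [octets_to_bps(a, b, interval_s) for a, b in zip(samples, samples[1:])]


# Hypothetical ifInOctets readings taken every 60 s; the last one has wrapped.
samples = [1_000_000, 8_500_000, 4_294_000_000, 1_000_000]
for bps in rates_from_samples(samples, 60.0):
    print(f"{bps / 1e6:.2f} Mbit/s")
```

Each reading yields one number per interface per interval, which is why SNMP can show that a link is busy but not who is using it. The wrap check assumes at most one wrap per interval; busy high-speed links are usually polled via 64-bit counters instead.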

Flow Records

Flow records have become the default element used in centralized network monitoring. A “flow” is a sequence of packets that share identical values in seven fields: source IP address, destination IP address, source port, destination port, layer 3 protocol type, type of service (TOS) byte, and input logical interface. While a flow is a defined element, “flow records” are analytical results that vary by standard, vendor, and configuration. The most common standards for flow records are NetFlow, IPFIX, sFlow, and J-Flow.
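The seven-field definition maps naturally onto a hashable key: any two packets whose keys compare equal belong to the same flow. A minimal sketch of that aggregation, assuming packets arrive already parsed into a key plus a length (the class and function names are hypothetical, and real flow records carry more fields such as timestamps and TCP flags):

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class FlowKey:
    """The seven fields that must match for packets to belong to one flow."""
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    protocol: int   # IP protocol number, e.g. 6 = TCP, 17 = UDP
    tos: int        # type-of-service byte
    input_if: int   # input logical interface index


@dataclass
class FlowRecord:
    packets: int = 0
    byte_count: int = 0


def build_flows(packets: list[tuple[FlowKey, int]]) -> dict[FlowKey, FlowRecord]:
    """Aggregate (key, packet length) pairs into per-flow packet and byte counts."""
    flows: defaultdict[FlowKey, FlowRecord] = defaultdict(FlowRecord)
    for key, length in packets:
        record = flows[key]
        record.packets += 1
        record.byte_count += length
    return dict(flows)


web = FlowKey("10.0.0.5", "192.0.2.10", 51514, 443, 6, 0, 1)
dns = FlowKey("10.0.0.5", "192.0.2.53", 53000, 53, 17, 0, 1)
flows = build_flows([(web, 1500), (web, 1500), (dns, 80)])
for key, rec in flows.items():
    print(key.dst_ip, key.dst_port, rec.packets, rec.byte_count)
```

Note that once packets are folded into a record, only the counts survive; the packet contents are gone.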

In basic flow analysis, packets entering a switch or router are sampled, and flows are identified from the sampled packets. Flow records are then compiled and exported to a flow collector, where they are stored and later analyzed by flow analysis software.
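Sampling is where flow data becomes statistical. With 1-in-n sampling, the device inspects only some packets and scales the counts back up by n. The sketch below uses deterministic every-nth sampling so the result is reproducible (real devices may instead sample randomly), and the packet lengths are made up for illustration:

```python
from __future__ import annotations


def sample_and_estimate(packet_lengths: list[int], n: int) -> tuple[int, int]:
    """Keep every n-th packet (1-in-n systematic sampling) and scale counts by n."""
    sampled = packet_lengths[::n]  # packets 0, n, 2n, ...
    est_packets = len(sampled) * n
    est_bytes = sum(sampled) * n
    return est_packets, est_bytes


# Hypothetical flow: two full-size packets and eight small ones.
lengths = [1500, 64, 64, 64, 1500, 64, 64, 64, 64, 64]
print("actual:   ", len(lengths), sum(lengths))
print("estimated:", *sample_and_estimate(lengths, 4))
```

Here the sampler happens to land on both large packets, so the estimate overstates the byte count several times over. Over many packets such errors tend to average out, but a short or unusual flow can be badly misrepresented or missed entirely.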

Flow-based data fills the gap SNMP leaves. Rather than stopping at simple measurements like overall throughput, it provides specific (though statistical) data on each flow, that is, each IP-to-IP conversation passing through the network device. This yields more detailed network statistics than SNMP and gives a very good picture of overall network health, but it comes at a price. Flow-based analysis relies heavily on the same equipment that controls network traffic, the routers and switches themselves, and on busy networks this can cause contention for hardware resources such as processing power and memory. When that contention occurs, flow analysis is what loses out. Flow data does support some troubleshooting, such as identifying users who are hogging bandwidth, but it includes no payload information (described below), and the packets themselves are not saved, which limits one’s ability to troubleshoot the network intelligently.
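Finding the users who are hogging bandwidth is a simple aggregation over flow records. A minimal sketch, assuming each record has been reduced to a hypothetical `(source IP, byte count)` pair:

```python
from __future__ import annotations

from collections import Counter


def top_talkers(flow_records: list[tuple[str, int]], n: int = 3) -> list[tuple[str, int]]:
    """Sum bytes per source IP across flow records and return the n largest."""
    per_host: Counter[str] = Counter()
    for src_ip, byte_count in flow_records:
        per_host[src_ip] += byte_count
    return per_host.most_common(n)


records = [
    ("10.0.0.5", 3_000),
    ("10.0.0.7", 900_000),
    ("10.0.0.5", 80),
    ("10.0.0.9", 12_000),
]
print(top_talkers(records, 2))
```

The result identifies who is using the bandwidth, but nothing in the records says what they were sending.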

Packet-Based

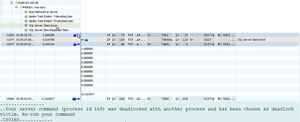

In a packet-based approach to network monitoring, software, hardware, or both intercept the traffic passing over a network or network segment. The tool, often called a “packet sniffer,” captures each packet as it crosses the network. The packets are then decoded and analyzed according to the appropriate Internet Engineering Task Force (IETF) Request for Comments (RFC) or other specification.
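Decoding "according to the appropriate RFC" means reading header fields at the offsets the specification defines. As an illustration, here is a minimal decoder for the fixed 20-byte IPv4 header laid out in RFC 791. It is a sketch only: it ignores IP options and does not verify the checksum, and the sample packet is constructed by hand.

```python
from __future__ import annotations

import socket
import struct

IPV4_HEADER = "!BBHHHBBH4s4s"  # network byte order, 20 bytes per RFC 791


def decode_ipv4_header(data: bytes) -> dict[str, int | str]:
    """Decode the fixed portion of an IPv4 header (options are not parsed)."""
    if len(data) < 20:
        raise ValueError("need at least 20 bytes for an IPv4 header")
    (ver_ihl, tos, total_len, ident, flags_frag,
     ttl, proto, checksum, src, dst) = struct.unpack(IPV4_HEADER, data[:20])
    return {
        "version": ver_ihl >> 4,
        "header_len": (ver_ihl & 0x0F) * 4,  # IHL counts 32-bit words
        "tos": tos,
        "total_length": total_len,
        "identification": ident,
        "flags": flags_frag >> 13,
        "fragment_offset": flags_frag & 0x1FFF,
        "ttl": ttl,
        "protocol": proto,
        "checksum": checksum,  # reported as-is, not verified
        "src": socket.inet_ntoa(src),
        "dst": socket.inet_ntoa(dst),
    }


sample = struct.pack(IPV4_HEADER, 0x45, 0, 60, 0x1C46, 0x4000, 64, 6, 0,
                     socket.inet_aton("10.0.0.5"), socket.inet_aton("192.0.2.10"))
hdr = decode_ipv4_header(sample)
print(hdr["src"], "->", hdr["dst"], "protocol", hdr["protocol"], "ttl", hdr["ttl"])
```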

Because every packet is captured (unlike flow records, which rely on statistical sampling), packet-based network monitoring provides information that is 100 percent accurate for each flow. And unlike SNMP, which relies on polling, the packet-based approach has minimal network impact: all analysis is done locally at the point of capture, which substantially reduces the amount of information that must traverse the network. The data available in a packet are also far more comprehensive than the seven fields that define a flow, so analysis can go much deeper, covering application-level behavior as well as the network itself. Finally, because packets can be stored (for a reasonable amount of time, depending on overall network usage and disk space), problems can be diagnosed immediately. There’s no need to try to reproduce a problem, because the packets that caused it are instantly available for analysis.
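How long "a reasonable amount of time" lasts is simple arithmetic: storage capacity divided by the average rate at which traffic is written. A rough sketch, assuming decimal units and ignoring capture-file overhead, indexing, and compression (the function name and figures are hypothetical):

```python
def retention_hours(disk_tb: float, avg_mbps: float) -> float:
    """Hours of traffic a capture store can hold at a given average rate.

    Assumes 1 TB = 10**12 bytes and 1 Mbit/s = 10**6 bit/s, and ignores
    file-format overhead, indexing, and compression.
    """
    bytes_per_second = avg_mbps * 1e6 / 8
    return disk_tb * 1e12 / bytes_per_second / 3600


# 10 TB of storage on a segment averaging 200 Mbit/s of traffic.
print(f"{retention_hours(10, 200):.1f} h")  # prints "111.1 h"
```

Doubling the average traffic halves the retention window, which is why the same appliance may keep days of history on a quiet segment but only hours on a busy core link.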

Generally speaking, you want to match the monitoring technology to the need. If you just need to check the status of a device, SNMP may be all you need. It is very cost-effective, but it only tells you the status of the node or device, not necessarily what it is doing on the network. If you are interested in sampled, high-level information about who is talking to whom and approximately how much traffic each party is generating or receiving, flow-based analysis may be sufficient. Flow-based analysis options can get expensive, however, as they are often licensed by the number of nodes or interfaces being monitored. Lastly, if you need full detail about what is happening on the network, possibly including the ability to go back in time and prove what happened, a packet-based solution is best. Packet-based analysis can also become costly when a large number of independent network segments each need to be monitored.

Often, no single one of these three options is best. In many cases, a solution that combines two or more of these methods provides the best results. In most multi-solution environments, SNMP tracks the status of end nodes (up or down, CPU or memory usage, etc.), while flow-based analysis is used at the network's extremities, where the number of devices and ports is lower (keeping costs in check), to show at a high level which nodes have the most network activity. Packet-based solutions round out the capabilities, providing all the data needed to prove exactly what happened, see every bit of every packet, and watch for anomalies in network traffic and behavior. They are often deployed at the network core, on Internet-facing links, and on any network segment carrying high-priority traffic that must be monitored as granularly as possible. Because packet-based solutions see everything on the wire, they suit not only day-to-day issues and information but also regulatory, compliance, and security-related network event tracking.
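The layered deployment described above can be written down as a simple rule per segment. A minimal sketch, assuming hypothetical role labels (`"core"`, `"internet"`, `"edge"`) and a priority flag; a real plan would also weigh licensing costs and probe placement:

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Segment:
    name: str
    role: str                   # hypothetical labels: "core", "internet", "edge"
    high_priority: bool = False


def plan_monitoring(segment: Segment) -> set[str]:
    """Choose monitoring methods for one segment using the layered approach."""
    methods = {"snmp"}          # device status is tracked everywhere
    if segment.role in ("core", "internet") or segment.high_priority:
        methods.add("packet")   # full capture where granularity matters most
    else:
        methods.add("flow")     # fewer devices and ports keep flow costs in check
    return methods


for seg in (Segment("dc-core", "core"), Segment("branch-12", "edge"),
            Segment("trading-floor", "edge", high_priority=True)):
    print(seg.name, sorted(plan_monitoring(seg)))
```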

Complementary to the packet-based approach to network monitoring is the concept of payloads. The payload of a packet is the data it carries after its headers, and it is often the link between the networking information and the application information. Without the payload, one may know that there is a problem on the network, such as slow response time between a server and a client, but packet header information (supplemental data placed at the beginning of a block of data being transmitted) and timing measurements cannot reveal anything more. Tying packet information together with payload information can complete the story.
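The distinction between header and payload comes down to byte offsets: skip the IPv4 header (its length is in the IHL field) and the TCP header (its length is in the data-offset field), and what remains is application data. A minimal sketch, assuming an unfragmented IPv4/TCP packet and a hand-built HTTP request as the sample:

```python
from __future__ import annotations

import struct


def tcp_payload(ip_packet: bytes) -> bytes:
    """Return the application payload of an unfragmented IPv4/TCP packet."""
    if ip_packet[9] != 6:
        raise ValueError("not a TCP packet")
    ihl = (ip_packet[0] & 0x0F) * 4                    # IPv4 header length in bytes
    total_len = struct.unpack("!H", ip_packet[2:4])[0]  # ignore any link-layer padding
    tcp = ip_packet[ihl:total_len]
    data_offset = (tcp[12] >> 4) * 4                   # TCP header length in bytes
    return tcp[data_offset:]


payload = b"GET /index.html HTTP/1.1\r\nHost: example.com\r\n\r\n"
# TCP header: ports, seq, ack, data offset (5 words), flags (PSH+ACK), window, checksum, urgent.
tcp_hdr = struct.pack("!HHIIBBHHH", 51514, 80, 1, 1, 0x50, 0x18, 65535, 0, 0)
ip_hdr = struct.pack("!BBHHHBBH4s4s", 0x45, 0, 20 + len(tcp_hdr) + len(payload),
                     0, 0x4000, 64, 6, 0, bytes([10, 0, 0, 5]), bytes([192, 0, 2, 10]))
packet = ip_hdr + tcp_hdr + payload

request_line = tcp_payload(packet).split(b"\r\n", 1)[0].decode("ascii")
print(request_line)  # prints "GET /index.html HTTP/1.1"
```

The headers alone would show a connection to port 80 on 192.0.2.10; only the payload shows which page the client actually requested, which is the kind of application context needed to explain a slow response.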

In closing, the question organizations face with respect to network monitoring is not whether to do it, but which approach to take. Whether that is SNMP, flow records, or packet-based monitoring depends on each organization’s business and operational needs.

By doing the appropriate due diligence at the outset, considering issues like data granularity, data accuracy, overhead, and cost, organizations will save themselves a lot of time and headaches in the long term.

Jay Botelho is director of product management at WildPackets.